
Creating a TensorFlow constant and printing its value

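```python
import tensorflow as tf  # TensorFlow 1.x API (in TF 2.x these live under tf.compat.v1)

# Create a constant tensor holding a string
hello = tf.constant("Hi, TensorFlow")

# Run the constant in a session to get its value
sess = tf.Session()
print(sess.run(hello))  # in Python 3 this prints b'Hi, TensorFlow' (a bytes object)
```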

How to use TensorFlow objects after creating them

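```python
import tensorflow as tf

node1 = tf.constant(3.0, tf.float32)  # dtype given explicitly
node2 = tf.constant(4.0)              # dtype inferred as tf.float32
node3 = tf.add(node1, node2)          # a node that adds node1 and node2

# These lines print Tensor objects (name, shape, dtype), not the values
print("node1:", node1, "node2:", node2)
print("node3:", node3)
```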

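Because node1, node2, and node3 are Tensor objects, printing them does not show their values; it only shows each tensor's name, shape, and dtype. To get the actual values, the tensors have to be evaluated in a session: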

import tensorflow as tf

node1 = tf.constant(3.0, tf.float32)

node2 = tf.constant(4.0)

node3 = tf.add(node1,node2)

#that is no print

#print("node1:",node1, "node2:", node2)

#print("node3: ",node3)

sess = tf.Session()

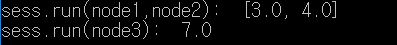

print("sess.run(node1,node2): ", sess.run([node1,node2]))

print("sess.run(node3): ", sess.run(node3))

In other words, you first build the graph, then execute it with sess.run.
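To make the build-then-run idea concrete, here is a minimal pure-Python sketch. It is not TensorFlow: `Const`, `Add`, and `run` are hypothetical names that only mimic the idea that creating a node records an operation, while the actual computation happens later when the node is run.

```python
# Toy illustration of deferred (graph-based) execution.
# Const, Add, and run are hypothetical names, not TensorFlow APIs.

class Const:
    def __init__(self, value):
        self.value = value

    def __repr__(self):
        # Printing a node shows the node itself, not a computed value
        return f"Const({self.value})"


class Add:
    def __init__(self, left, right):
        # Building the node only stores its inputs; nothing is computed yet
        self.left = left
        self.right = right

    def __repr__(self):
        return f"Add({self.left!r}, {self.right!r})"


def run(node):
    """Evaluate a node recursively, like a greatly simplified sess.run."""
    if isinstance(node, Const):
        return node.value
    if isinstance(node, Add):
        return run(node.left) + run(node.right)
    raise TypeError(f"unknown node type: {type(node).__name__}")


node1 = Const(3.0)
node2 = Const(4.0)
node3 = Add(node1, node2)

print(node3)       # Add(Const(3.0), Const(4.0))  (structure only)
print(run(node3))  # 7.0
```

Printing `node3` shows only the structure, just as printing a TensorFlow tensor shows its metadata; the value appears only after `run`.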

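```python
import tensorflow as tf

# Placeholders are empty nodes whose values are supplied at run time
a = tf.placeholder(tf.float32)
b = tf.placeholder(tf.float32)
adder_node = a + b  # shorthand for tf.add(a, b)

sess = tf.Session()

# feed_dict supplies the values for the placeholders a and b
print(sess.run(adder_node, feed_dict={a: 3, b: 4.5}))
print(sess.run(adder_node, feed_dict={a: [1, 3], b: [2, 4]}))
```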

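feed_dict passes values into the empty nodes a and b. The values can also be arrays (lists), in which case the addition is element-wise: the first call prints 7.5 and the second prints [3. 7.].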
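The placeholder idea can be sketched the same way in plain Python. `Placeholder`, `AddNode`, and `run` are hypothetical names; the sketch only mimics how a feed dict fills empty nodes at run time and how equal-length lists are added element-wise. (TensorFlow itself applies broadcasting rules, which this sketch does not model.)

```python
# Toy illustration of placeholders filled through a feed dict.
# Placeholder, AddNode, and run are hypothetical names, not TensorFlow APIs.

class Placeholder:
    """An empty node whose value is supplied only when the graph is run."""
    def __init__(self, name):
        self.name = name


class AddNode:
    def __init__(self, left, right):
        self.left = left
        self.right = right


def run(node, feed_dict):
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise KeyError(f"no value fed for placeholder {node.name!r}")
        return feed_dict[node]
    if isinstance(node, AddNode):
        left = run(node.left, feed_dict)
        right = run(node.right, feed_dict)
        # Equal-length lists are added element-wise; scalars are added directly
        if isinstance(left, list) and isinstance(right, list):
            return [p + q for p, q in zip(left, right)]
        return left + right
    raise TypeError(f"unknown node type: {type(node).__name__}")


a = Placeholder("a")
b = Placeholder("b")
adder_node = AddNode(a, b)

print(run(adder_node, feed_dict={a: 3, b: 4.5}))          # 7.5
print(run(adder_node, feed_dict={a: [1, 3], b: [2, 4]}))  # [3, 7]
```

The same graph (`adder_node`) is reused with different inputs; only the feed dict changes between runs.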


Build graph using TF operations

Implementing linear regression

```python
import tensorflow as tf

# Training data
x_train = [1, 2, 3]
y_train = [1, 2, 3]

# Trainable variables, initialized with random values
w = tf.Variable(tf.random_normal([1]), name='weight')
b = tf.Variable(tf.random_normal([1]), name='bias')

# Our hypothesis: XW + b
hypothesis = x_train * w + b

# Cost/loss function: mean squared error
cost = tf.reduce_mean(tf.square(hypothesis - y_train))

# Minimize the cost with gradient descent
learning_rate = 0.01
optimizer = tf.train.GradientDescentOptimizer(learning_rate)
train = optimizer.minimize(cost)

# Launch the graph in a session
sess = tf.Session()

# Initialize the global variables (w and b) in the graph
sess.run(tf.global_variables_initializer())

# Fit the line
for step in range(2001):
    sess.run(train)
    if step % 20 == 0:  # log every 20 steps
        print(step, sess.run(cost), sess.run(w), sess.run(b))
```

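tf.random_normal([1]): returns a tensor of shape [1] (a 1-D tensor with one element) filled with a random value drawn from a normal distribution

tf.reduce_mean(): computes the mean of the tensor's elements

train = optimizer.minimize(cost): creates an operation that, each time it is run, adjusts w and b in the direction that reduces cost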
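To see what `optimizer.minimize(cost)` does on each `sess.run(train)`, here is a minimal pure-Python sketch of the same gradient descent on the same data. Assumptions: `w` and `b` start at 0.0 instead of random normal values, and the gradients are derived by hand from cost = mean((w*x + b - y)^2), which gives d(cost)/dw = 2*mean((w*x + b - y)*x) and d(cost)/db = 2*mean(w*x + b - y). The helper name `fit_line` is hypothetical.

```python
# Plain-Python gradient descent for the hypothesis w*x + b.
# fit_line is a hypothetical helper; w and b start at 0.0
# (the TensorFlow code above uses random initial values instead).

def fit_line(xs, ys, learning_rate=0.01, steps=2001):
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(steps):
        # Prediction errors for the current w and b
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # One gradient descent step: move against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    # Final cost, like tf.reduce_mean(tf.square(hypothesis - y))
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    cost = sum(e * e for e in errors) / n
    return w, b, cost


w, b, cost = fit_line([1, 2, 3], [1, 2, 3])
print(w, b, cost)  # w close to 1, b close to 0, cost close to 0
```

Since y = x exactly in this data, the best line is w = 1, b = 0, and 2001 steps at a learning rate of 0.01 get very close to it.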

You can also supply the training data yourself through feed_dict, using placeholders for x and y:

```python
import tensorflow as tf

# Placeholders for the training data; shape=[None] accepts a 1-D list of any length
x = tf.placeholder(tf.float32, shape=[None])
y = tf.placeholder(tf.float32, shape=[None])

w = tf.Variable(tf.random_normal([1]), name='weight')
b = tf.Variable(tf.random_normal([1]), name='bias')

# Our hypothesis: XW + b
hypothesis = x * w + b

# Cost/loss function: mean squared error
cost = tf.reduce_mean(tf.square(hypothesis - y))

# Minimize the cost with gradient descent
learning_rate = 0.01
optimizer = tf.train.GradientDescentOptimizer(learning_rate)
train = optimizer.minimize(cost)

# Launch the graph in a session
sess = tf.Session()

# Initialize the global variables (w and b) in the graph
sess.run(tf.global_variables_initializer())

# Fit the line, feeding the data through feed_dict on every step
for step in range(2001):
    cost_val, w_val, b_val, _ = sess.run(
        [cost, w, b, train],
        feed_dict={x: [1, 2, 3, 4, 5],
                   y: [2.1, 3.1, 4.1, 5.1, 6.1]})
    if step % 20 == 0:  # log every 20 steps
        print(step, cost_val, w_val, b_val)
```
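In this data every y equals x + 1.1, so the training above should drive w toward 1 and b toward 1.1. As a sanity check (a different technique from the gradient descent used above), ordinary least squares gives the best-fit line in closed form. `least_squares` is a hypothetical helper written in plain Python.

```python
# Closed-form simple linear regression (ordinary least squares).
# least_squares is a hypothetical helper, used here only to check the expected fit.

def least_squares(xs, ys):
    """Return (w, b) minimizing the mean squared error of w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    # The best-fit line passes through the point (mean_x, mean_y)
    b = mean_y - w * mean_x
    return w, b


w, b = least_squares([1, 2, 3, 4, 5], [2.1, 3.1, 4.1, 5.1, 6.1])
print(w, b)  # approximately 1.0 and 1.1 (up to floating-point rounding)
```

The gradient descent values printed in the training loop should approach these numbers as the step count grows.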
